Insurance Claim Prediction using Logistic Regression

The rising cost of health insurance is alarming throughout the world. Premiums for consumer and employer-sponsored health insurance have increased by 131 percent over the last decade. A major cause of this increase is payment errors made by insurance companies while processing claims. These errors force claims to be re-processed, which is known as re-work. Re-work accounts for a significant portion of a health plan's administrative costs and service issues, and it has a direct monetary impact when the insurance company pays more or less than it should have. The most successful kinds of machine learning algorithms are those that automate decision-making processes by generalizing from known examples.

Insurance companies apply numerous models to analyze and predict health insurance costs. Previous work has investigated predictive modeling of healthcare costs using several statistical techniques, and machine learning approaches have also been used to predict high-cost healthcare expenditures.

In this project, we discuss the use of logistic regression to predict insurance claims. We use a sample of 1,338 records with the following features:

- age: age of the policyholder

- sex: gender of the policyholder (female=0, male=1)

- bmi: body mass index, an objective measure of whether body weight is relatively high or low for a given height, computed as weight divided by the square of height (kg/m^2); the ideal range is 18.5 to 25

- children: number of children/dependents of the policyholder

- smoker: smoking status of the policyholder (non-smoker=0, smoker=1)

- region: the policyholder's residential area in the US (northeast=0, northwest=1, southeast=2, southwest=3)

- charges: individual medical costs billed by health insurance

- insuranceclaim: the label to predict from the features above (1 = valid insurance claim, 0 = invalid)
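The bmi definition above is easy to check numerically. This is a minimal sketch; the function name `bmi` and the example weight and height are illustrative and not taken from the dataset.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    if height_m <= 0:
        raise ValueError("height must be positive")
    return weight_kg / height_m ** 2


# A 70 kg person who is 1.75 m tall has a BMI of about 22.9,
# inside the 18.5 to 25 range described above.
print(round(bmi(70, 1.75), 1))  # 22.9
```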

Here is a sample of the dataset:

Now we import pandas to read our data from a CSV file and manipulate it. We also use NumPy to convert our data into a format suitable for our classification model, and seaborn and matplotlib for visualizations. Next, we import the LogisticRegression class from scikit-learn (sklearn), which we use to build the classification model. Lastly, we use joblib to save the model for future use. Older scikit-learn releases bundled it as sklearn.externals.joblib, but that alias was removed in scikit-learn 0.23, so joblib is now imported as a standalone package.

```python
import pandas as pd
import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt
from sklearn.linear_model import LogisticRegression
import joblib  # standalone package; used to save the trained model
```

Our data is saved in a CSV file called insurance.csv. We first read the dataset into a pandas DataFrame called insuranceDF, and then use the head() function to show the first five records.
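A minimal sketch of this step, assuming insurance.csv has a header row with the column names shown below. So that the example runs without the file, it reads the first five records (the same ones printed below) from an in-memory string via `io.StringIO` instead of from disk.

```python
import io

import pandas as pd

# In the project the data is read from disk:
#     insuranceDF = pd.read_csv('insurance.csv')
# Here the first five records are read from a string instead, so the sketch is self-contained.
SAMPLE_CSV = """age,sex,bmi,children,smoker,region,charges,insuranceclaim
19,0,27.900,0,1,3,16884.92400,1
18,1,33.770,1,0,2,1725.55230,1
28,1,33.000,3,0,2,4449.46200,0
33,1,22.705,0,0,1,21984.47061,0
32,1,28.880,0,0,1,3866.85520,1
"""

insuranceDF = pd.read_csv(io.StringIO(SAMPLE_CSV))
print(insuranceDF.head())  # head() returns the first five rows by default
```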

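| | age | sex | bmi | children | smoker | region | charges | insuranceclaim |
|---|---|---|---|---|---|---|---|---|
| 0 | 19 | 0 | 27.900 | 0 | 1 | 3 | 16884.92400 | 1 |
| 1 | 18 | 1 | 33.770 | 1 | 0 | 2 | 1725.55230 | 1 |
| 2 | 28 | 1 | 33.000 | 3 | 0 | 2 | 4449.46200 | 0 |
| 3 | 33 | 1 | 22.705 | 0 | 0 | 1 | 21984.47061 | 0 |
| 4 | 32 | 1 | 28.880 | 0 | 0 | 1 | 3866.85520 | 1 |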

Let’s also make sure that our data is clean (has no null values, etc.).

```python
insuranceDF.info()
```

```
<class 'pandas.core.frame.DataFrame'>
RangeIndex: 1338 entries, 0 to 1337
Data columns (total 8 columns):
age               1338 non-null int64
sex               1338 non-null int64
bmi               1338 non-null float64
children          1338 non-null int64
smoker            1338 non-null int64
region            1338 non-null int64
charges           1338 non-null float64
insuranceclaim    1338 non-null int64
dtypes: float64(2), int64(6)
memory usage: 83.7 KB
```
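info() reports non-null counts per column. A more direct check counts missing values with `isnull().sum()`, and it is also worth looking for exact duplicate rows, another common data-quality issue. The sketch below runs both checks on the five sample records as a stand-in for the full insuranceDF.

```python
import pandas as pd

# Stand-in for insuranceDF: the five sample records shown earlier.
insuranceDF = pd.DataFrame({
    "age": [19, 18, 28, 33, 32],
    "sex": [0, 1, 1, 1, 1],
    "bmi": [27.900, 33.770, 33.000, 22.705, 28.880],
    "children": [0, 1, 3, 0, 0],
    "smoker": [1, 0, 0, 0, 0],
    "region": [3, 2, 2, 1, 1],
    "charges": [16884.92400, 1725.55230, 4449.46200, 21984.47061, 3866.85520],
    "insuranceclaim": [1, 1, 0, 0, 1],
})

# Number of missing values per column; all zeros means there are no nulls.
null_counts = insuranceDF.isnull().sum()
print(null_counts)

# Rows that exactly repeat an earlier row.
duplicate_rows = insuranceDF.duplicated().sum()
print("duplicate rows:", duplicate_rows)

assert null_counts.sum() == 0, "dataset contains missing values"
```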

Let’s start by computing the correlation between every pair of features (including the outcome variable) and visualizing the correlations with a heatmap.
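The heatmap step can be sketched with seaborn's `heatmap` as shown below. The helper name `plot_correlation_heatmap`, the output filename and the colour settings are illustrative choices. The stand-in data is only the five sample records, so its correlations will not match the full-dataset matrix printed below.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend: render straight to a file

import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns


def plot_correlation_heatmap(df: pd.DataFrame, path: str) -> pd.DataFrame:
    """Compute pairwise Pearson correlations and save them as an annotated heatmap."""
    corr = df.corr()
    fig, ax = plt.subplots(figsize=(9, 7))
    # Fix the colour scale to [-1, 1] so that zero correlation sits mid-colormap.
    sns.heatmap(corr, annot=True, fmt=".2f", cmap="coolwarm", vmin=-1, vmax=1, ax=ax)
    ax.set_title("Pairwise correlations")
    fig.tight_layout()
    fig.savefig(path)
    plt.close(fig)
    return corr


# Stand-in for insuranceDF: the five sample records shown earlier.
insuranceDF = pd.DataFrame({
    "age": [19, 18, 28, 33, 32],
    "sex": [0, 1, 1, 1, 1],
    "bmi": [27.900, 33.770, 33.000, 22.705, 28.880],
    "children": [0, 1, 3, 0, 0],
    "smoker": [1, 0, 0, 0, 0],
    "region": [3, 2, 2, 1, 1],
    "charges": [16884.92400, 1725.55230, 4449.46200, 21984.47061, 3866.85520],
    "insuranceclaim": [1, 1, 0, 0, 1],
})

corr = plot_correlation_heatmap(insuranceDF, "correlation_heatmap.png")
print(corr.round(2))
```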

```python
corr = insuranceDF.corr()
print(corr)
```

| | age | sex | bmi | children | smoker | region | charges | insuranceclaim |
|---|---|---|---|---|---|---|---|---|
| age | 1.000000 | -0.020856 | 0.109272 | 0.042469 | -0.025019 | 0.002127 | 0.299008 | 0.113723 |
| sex | -0.020856 | 1.000000 | 0.046371 | 0.017163 | 0.076185 | 0.004588 | 0.057292 | 0.031565 |
| bmi | 0.109272 | 0.046371 | 1.000000 | 0.012759 | 0.003750 | 0.157566 | 0.198341 | 0.384198 |
| children | 0.042469 | 0.017163 | 0.012759 | 1.000000 | 0.007673 | 0.016569 | 0.067998 | -0.409526 |
| smoker | -0.025019 | 0.076185 | 0.003750 | 0.007673 | 1.000000 | -0.002181 | 0.787251 | 0.333261 |
| region | 0.002127 | 0.004588 | 0.157566 | 0.016569 | -0.002181 | 1.000000 | -0.006208 | 0.020891 |
| charges | 0.299008 | 0.057292 | 0.198341 | 0.067998 | 0.787251 | -0.006208 | 1.000000 | 0.309418 |
| insuranceclaim | 0.113723 | 0.031565 | 0.384198 | -0.409526 | 0.333261 | 0.020891 | 0.309418 | 1.000000 |

When using machine learning algorithms, we should always split our data into a training set and a test set. (If we are running a large number of experiments, we should divide the data into three parts: a training set, a development set and a test set, so that choices tuned on the development set do not bias the final test score.) In our case, we will also set aside some data for manual cross-checking.

The dataset contains 1,338 records in total. We use the first 1,000 records to train the model, the next 300 to test it, and the last 38 to cross-check it manually.

```python
dfTrain = insuranceDF[:1000]
dfTest = insuranceDF[1000:1300]
dfCheck = insuranceDF[1300:]
```
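Slicing by position keeps the file's original row order, so if the rows were sorted in any way, the three subsets might not be representative. As an alternative (not what this project does), the same 1000/300/38 split can be drawn at random with scikit-learn's `train_test_split`, where an integer `train_size` is an absolute row count. The helper name `shuffled_split`, the seed and the placeholder frame are illustrative.

```python
import pandas as pd
from sklearn.model_selection import train_test_split


def shuffled_split(df: pd.DataFrame, n_train: int = 1000, n_test: int = 300, seed: int = 42):
    """Randomly split df into train / test / check subsets of fixed sizes.

    Integer train_size values are absolute row counts; whatever remains after
    the second split becomes the manual cross-check set.
    """
    train, rest = train_test_split(df, train_size=n_train, random_state=seed, shuffle=True)
    test, check = train_test_split(rest, train_size=n_test, random_state=seed, shuffle=True)
    return train, test, check


# Placeholder frame with 1338 rows standing in for insuranceDF.
demo = pd.DataFrame({"row_id": range(1338)})
dfTrain, dfTest, dfCheck = shuffled_split(demo)
print(len(dfTrain), len(dfTest), len(dfCheck))  # 1000 300 38
```

On the real data, passing `stratify=` with the relevant `insuranceclaim` column to each `train_test_split` call would also keep the proportion of valid claims similar across the subsets.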

Next, we separate the label from the features for both the training and test datasets. We also convert them into NumPy arrays, the format scikit-learn works with internally.

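```python
# Separate labels from features; drop(columns=...) works in both old and new pandas
# (pandas 2.0 no longer accepts the axis as a positional argument, e.g. drop('insuranceclaim', 1))
trainLabel = np.asarray(dfTrain['insuranceclaim'])
trainData = np.asarray(dfTrain.drop(columns='insuranceclaim'))
testLabel = np.asarray(dfTest['insuranceclaim'])
testData = np.asarray(dfTest.drop(columns='insuranceclaim'))
```

As the final step before training, we normalize our inputs. Machine learning models often benefit substantially from input normalization, and it also makes it easier to judge the importance of each feature by looking at the model weights. We normalize the data so that each variable has a mean of 0 and a standard deviation of 1, using the mean and standard deviation computed on the training set only, so that no information from the test set leaks into training.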

```python
# Statistics come from the training set only and are reused for the test set
means = np.mean(trainData, axis=0)
stds = np.std(trainData, axis=0)
trainData = (trainData - means)/stds
testData = (testData - means)/stds
```
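This normalization is the standard z-score, z = (x - mean) / std, applied column by column. scikit-learn's `StandardScaler` performs the same transformation and also uses the population standard deviation (NumPy's default `ddof=0`). The sketch below checks that the two agree, using random placeholder arrays with 7 feature columns instead of the insurance data.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
# Placeholder arrays standing in for trainData / testData (7 feature columns).
trainData = rng.normal(loc=30.0, scale=10.0, size=(1000, 7))
testData = rng.normal(loc=30.0, scale=10.0, size=(300, 7))

# Manual z-scoring, as in the project: statistics come from the training set only.
means = np.mean(trainData, axis=0)
stds = np.std(trainData, axis=0)
trainManual = (trainData - means) / stds
testManual = (testData - means) / stds

# The same transformation with scikit-learn: fit on train, reuse on test.
scaler = StandardScaler().fit(trainData)
trainScaled = scaler.transform(trainData)
testScaled = scaler.transform(testData)

print(np.allclose(trainManual, trainScaled), np.allclose(testManual, testScaled))  # True True
```

One practical difference: `StandardScaler` treats a zero standard deviation as 1 and leaves constant columns unscaled, whereas the manual version would divide by zero.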

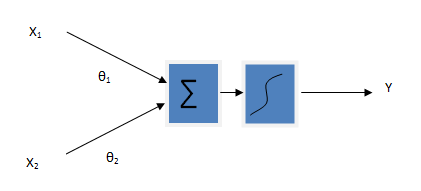

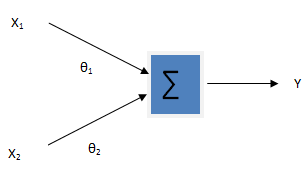

We can now train our classification model. We’ll use a simple machine learning model called logistic regression. Since the model is readily available in sklearn, training takes only a few lines of code: we first create an instance called insuranceCheck and then call its fit method to train the model.

```python
insuranceCheck = LogisticRegression()
insuranceCheck.fit(trainData, trainLabel)
```
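Logistic regression models the probability of a valid claim as the sigmoid of a weighted sum of the normalized features, p = 1 / (1 + exp(-(w·x + b))), and predicts class 1 when w·x + b > 0 (equivalently p > 0.5). A fitted `LogisticRegression` exposes w and b as `coef_` and `intercept_`, which are also the model weights to inspect when comparing feature importance. The sketch below trains on a synthetic dataset from `make_classification` (not the insurance data) and checks that applying the formula by hand reproduces `predict_proba` and `predict`.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the normalized training data: 7 features, binary label.
X, y = make_classification(n_samples=500, n_features=7, random_state=0)

model = LogisticRegression().fit(X, y)

# Recompute the model's output by hand from its learned parameters.
w = model.coef_[0]             # one weight per feature
b = model.intercept_[0]        # bias term
z = X @ w + b                  # linear score, as returned by model.decision_function(X)
p = 1.0 / (1.0 + np.exp(-z))   # sigmoid turns the score into P(class 1)

print(np.allclose(p, model.predict_proba(X)[:, 1]))            # True
print(np.array_equal((z > 0).astype(int), model.predict(X)))  # True
```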

Now we use the test data to measure the accuracy of the model.

```python
accuracy = insuranceCheck.score(testData, testLabel)
print("accuracy = ", accuracy * 100, "%")
```

Output:

```
accuracy = 85.66666666666667 %
```
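The 38 records held back in dfCheck are never used in the walkthrough. One way to do the manual cross-check is sketched below under our own assumptions: the helper `cross_check` and its output columns are hypothetical, and a synthetic `make_classification` dataset with placeholder feature names stands in for insuranceDF. The check records are normalized with the training means and stds, exactly like testData, and the fraction of correct rows equals what `score` reports, since `score` returns mean accuracy.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression


def cross_check(model, dfCheck: pd.DataFrame, means, stds) -> pd.DataFrame:
    """Predict held-out records and line the predictions up against the true labels.

    The features are normalized with the *training* means and stds,
    exactly as testData was, before being passed to the model.
    """
    checkLabel = np.asarray(dfCheck['insuranceclaim'])
    checkData = np.asarray(dfCheck.drop(columns='insuranceclaim'))
    checkData = (checkData - means) / stds
    result = pd.DataFrame({
        'actual': checkLabel,
        'predicted': model.predict(checkData),
        'prob_claim': model.predict_proba(checkData)[:, 1],  # P(insuranceclaim = 1)
    }, index=dfCheck.index)
    result['correct'] = result['actual'] == result['predicted']
    return result


# Synthetic stand-in for insuranceDF (placeholder feature names, not the real columns).
X, y = make_classification(n_samples=1338, n_features=7, random_state=1)
df = pd.DataFrame(X, columns=[f'feature_{i}' for i in range(7)])
df['insuranceclaim'] = y
dfTrain, dfCheck = df[:1000], df[1300:]

trainData = np.asarray(dfTrain.drop(columns='insuranceclaim'))
trainLabel = np.asarray(dfTrain['insuranceclaim'])
means, stds = trainData.mean(axis=0), trainData.std(axis=0)
model = LogisticRegression().fit((trainData - means) / stds, trainLabel)

report = cross_check(model, dfCheck, means, stds)
print(report.head())
print("cross-check accuracy:", report['correct'].mean())
```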

Here is the final code of the project for “Insurance Claim Prediction using Logistic Regression”:

```python
# Load essential libraries
import pandas as pd
import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt
from sklearn.linear_model import LogisticRegression
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23

# Read CSV
insuranceDF = pd.read_csv('insurance.csv')
print(insuranceDF.head())
insuranceDF.info()
corr = insuranceDF.corr()

# Train Test Split: 1000 rows for training, 300 for testing, 38 for manual cross-checking
dfTrain = insuranceDF[:1000]
dfTest = insuranceDF[1000:1300]
dfCheck = insuranceDF[1300:]

# Convert to numpy array
trainLabel = np.asarray(dfTrain['insuranceclaim'])
trainData = np.asarray(dfTrain.drop(columns='insuranceclaim'))
testLabel = np.asarray(dfTest['insuranceclaim'])
testData = np.asarray(dfTest.drop(columns='insuranceclaim'))

# Normalize Data using training-set statistics
means = np.mean(trainData, axis=0)
stds = np.std(trainData, axis=0)
trainData = (trainData - means)/stds
testData = (testData - means)/stds

# Train the logistic regression model
insuranceCheck = LogisticRegression()
insuranceCheck.fit(trainData, trainLabel)

# Now use our test data to find out accuracy of the model.
accuracy = insuranceCheck.score(testData, testLabel)
print("accuracy = ", accuracy * 100, "%")
```

Download the dataset here: here
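The introduction says the model is saved with joblib for future use, but the code above only imports it. One way to do that is sketched below, assuming the standalone `joblib` package. The filename `insuranceModel.pkl` and the choice to bundle the means and stds with the model are our own; because the model was trained on normalized inputs, new records must be scaled with the same statistics before prediction. Synthetic data from `make_classification` stands in for the insurance data.

```python
import joblib
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic stand-in for the project's training data (7 features, binary label).
X, y = make_classification(n_samples=200, n_features=7, random_state=0)
means, stds = X.mean(axis=0), X.std(axis=0)
insuranceCheck = LogisticRegression().fit((X - means) / stds, y)

# Save the model together with the normalization statistics it depends on.
# 'insuranceModel.pkl' is a hypothetical filename.
joblib.dump({'model': insuranceCheck, 'means': means, 'stds': stds}, 'insuranceModel.pkl')

# Later, possibly in another process: reload, normalize new raw records, predict.
bundle = joblib.load('insuranceModel.pkl')
newData = (X[:5] - bundle['means']) / bundle['stds']
print(bundle['model'].predict(newData))
```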